Click, clique, radical chic

Some videos on the internet are naughtier than others. Thomas Frissen researches whether the flood of information, ideas and visual stimuli that digital technologies expose us to could help radicalise individuals and polarise societies.

To start with the punchline: no, it’s not all the internet’s fault. “Digital technology makes ideas more accessible – also bad ones,” says Thomas Frissen, Assistant Professor in Digital Technology and Society. However, the algorithmic architecture of big social media platforms means that we end up in filter bubbles, i.e. ideologically consonant spaces where our opinions are only ever reinforced.

This, in turn, can lead to polarisation and radicalisation. "You're always just a few clicks away from extreme content. You start with a YouTube video about whether you should stretch before or after jogging, the next suggestion is about marathons, you click and the next suggestion is about ultra-marathons across the Mont Blanc…"

In the chokehold of attention-grabbing

Social media hasn't created this problem ex nihilo; it has skilfully hijacked the human predisposition towards desensitisation. Frissen cites a 2016 study of the YouTube algorithm and radicalisation, which showed that following the videos suggested next to footage of Trump rallies quickly led to Neo-Nazi content or deep-state conspiracy theories. That is not to say, of course, that the designers responsible favour such ideas.

The business model of digital platforms is to monopolise and monetise your time. The algorithm, fed by an almost unimaginable amount of data generated by you and users like you, simply serves you content that you agree with but that is also outrageous enough to capture your attention. Dialling up the nuance on an argument would lose viewers – dialling up the volume keeps them stimulated.
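To make that logic concrete, here is a deliberately simplified toy ranker. It is not any platform's actual system, and the article describes none: the two features (`agreement`, `outrage`), their 0 to 1 scores and the weights are all hypothetical. It only shows that a ranker scoring purely on predicted engagement has no term that rewards nuance, so calmer content sinks.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Video:
    title: str
    agreement: float  # hypothetical: how closely it matches the viewer's existing views (0..1)
    outrage: float    # hypothetical: how provocative it is (0..1)


def engagement_score(v: Video, w_agree: float = 1.0, w_outrage: float = 1.5) -> float:
    # Toy model: predicted engagement is a weighted sum of agreement and outrage.
    # Nothing in the score rewards balance or nuance.
    return w_agree * v.agreement + w_outrage * v.outrage


def recommend(candidates: List[Video], k: int = 3) -> List[Video]:
    # Rank purely by predicted engagement and keep the top k.
    return sorted(candidates, key=engagement_score, reverse=True)[:k]


candidates = [
    Video("Balanced explainer", agreement=0.6, outrage=0.1),
    Video("Angry rant", agreement=0.8, outrage=0.9),
    Video("Opposing view", agreement=0.1, outrage=0.3),
]
print([v.title for v in recommend(candidates, k=2)])
```

With these invented numbers the rant scores 2.15 against 0.75 for the explainer, and the opposing view drops out of the top two altogether.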

‘Before the bomb goes off’

So while the filter mechanisms favour extremes, do they play a role in radicalising people? And what is radicalisation anyway? “It depends on who you ask: a legal scholar might say it’s about breaking the law, a psychologist might link it to a violent worldview… I see it as a gradual process of adopting violent behaviour. Cognitive radicalisation can occur simultaneously, but not necessarily at the same speed.”

Frissen traces the origins of the term as we use it now to the beginning of this century and a series of terrorist attacks inspired by Salafi-Jihadism. "In policy documents, e.g. from the CIA, you hardly see the word 'radical' mentioned before then. But after the attacks it became associated with the process of going from a well-integrated Muslim to a terrorist – in academic literature, it was described as 'what happens before the bomb goes off'."

All roads lead to the fringe

The literature has evolved a good deal since then. For a start, the concept is no longer limited to an Islamic context. “Research shows that most radicals do not engage in terrorism – and that not all terrorists are radicals.” None of which is to ignore the subjective element in moral judgements: one man’s terrorist is another’s civil rights hero.

If extremism, broadly defined, is about occupying a dogmatic, ideologically uniform space at the fringe of a spectrum, it is easy to see how social media could be conducive to that end. "In a sense, digital platforms provide echo chambers without much room for diversity." Algorithms curate a seemingly open space for subjects sharing an ideology, vying for attention through one-upmanship – that goes for CrossFit and the ketogenic diet as much as for white supremacy.

Thomas Frissen is Assistant Professor in Digital Technology and Society at the Faculty of Arts and Social Sciences (FASoS).

Beheading videos unproblematic – sort of

For his PhD project, Frissen went to inner city schools in Brussels and Antwerp with high proportions of pupils with migrant backgrounds to research behaviours prompted by online radicalisation. Two out of five pupils had watched videos of religiously motivated beheadings – which, somewhat counterintuitively, Frissen describes as not a sign of radicalisation.

"It's more about morbid curiosity – even if they actively sought it out, it wasn't correlated with radicalisation. Other factors, like previous criminal behaviour, are much more significant. There is broad consensus among experts that there's no standard psychological profile for terrorists – which makes early detection very difficult."

Glossy, scholarly and violent

Frissen also conducted qualitative research on IS-produced magazines, which he describes as flashy and visually very impressive – but a rather dry, scholastic affair. "I collaborated with a scholar of Islam who confirmed that they are very well researched. They are very violent but on the whole extremely boring – it's mostly about developing moral arguments from religious scripture."

Bracketing the societal and cultural conditions conducive to radicalisation, Frissen points out that “digital media facilitate targeting susceptible young people, but there was no correlation between coming across content in your feed and radicalisation.” There was a correlation between seeking out those magazines and radicalisation, but Frissen concedes that they might be an indicator rather than a catalyst.

It's a heavy and challenging subject to work on, but it hasn't changed him much – with one exception. "I was clean-shaven when I started my PhD on radicalisation – by the time I defended the thesis I had a full beard, which raised a lot of concern," Frissen laughs, before adding that this shows how readily radicalisation is conflated with cultural symbols in the news.

Lean mean meme machine

Since then, Frissen has moved on to studying polarisation driven by digital technology, in particular synthetic media such as deep fakes and memes. While that sounds like a project made up on the spot by someone caught procrastinating in the office, its relevance can hardly be overstated.

“Synthetic media are media artefacts that have been generated or manipulated using artificial intelligence (AI) – generative adversarial networks (GANs) in particular. Machine learning and digital tools are improving so fast that anybody will be able to create footage that humans will struggle to identify as fake. Imagine the power of this – it’s a serious threat to democracy.”

"You have some really quite mad ideas and media that start out on the darker fringes of the web, like 4chan or Reddit. Some gain traction in those bubbles and are shared on Facebook. If something is outrageous enough to provoke reactions, the algorithm promotes its spread – without verifying its authenticity. A recent study shows that articles linking 5G to the spread of the coronavirus generate almost ten times as much engagement on Facebook as reputable coverage."

A dilemma for established media outlets: if a piece of synthetic media, e.g. a deep fake of a celebrity or a politician, is the talk of the town, they have to cover it, even if only to debunk it. But by doing so, they legitimise the argument and further reinforce its spread. This is an incredibly complex and diffuse process, but synthetic media such as memes provide an excellent way of tracing the early spread of ideas across the internet.

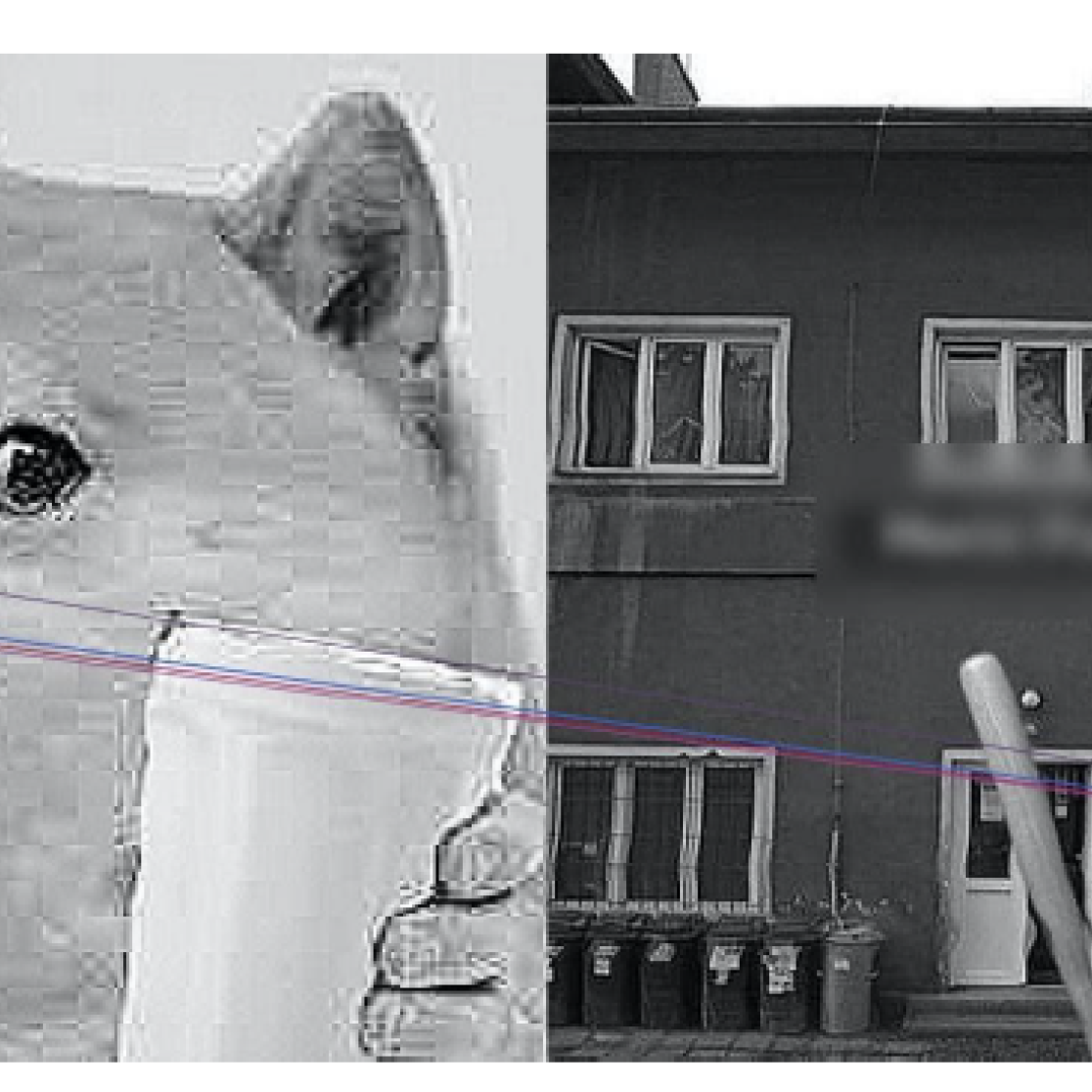

Studying their evolving use might sound as ambitious as counting fish in the sea, but Frissen embraces digital methods. He has developed an algorithm to detect similarities and key features, which can trawl the internet and collect all iterations of the same synthetic medium, whether it’s rotated, mirrored or altered. This new method then allows for the qualitative study of the evolution of those memes. “I’m very excited by the method itself but also by all the research it will facilitate,” says Frissen before sending another meme.

Frissen's algorithm in action; from his study with Cedric Courtois (University of Queensland)
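The article does not say how Frissen's algorithm works, so the following is only a generic sketch of one well-known way to match "the same" image across edits: perceptual hashing. It uses an average hash (each pixel becomes one bit: brighter or darker than the image mean) and compares one image against all eight rotations and mirror images of another, keeping the smallest Hamming distance. Several assumptions apply: images are already downscaled to a small square grayscale grid, only 90-degree rotations and mirroring are handled, and the threshold of 5 bits is an arbitrary illustrative value.

```python
from typing import List

Grid = List[List[float]]  # grayscale values, assumed already downscaled to a small square grid


def average_hash(img: Grid) -> int:
    # One bit per pixel: 1 if the pixel is brighter than the image's mean, else 0.
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def rotate90(img: Grid) -> Grid:
    # Clockwise rotation: transpose, then reverse each row.
    return [list(row)[::-1] for row in zip(*img)]


def mirror(img: Grid) -> Grid:
    # Horizontal flip.
    return [row[::-1] for row in img]


def dihedral_variants(img: Grid) -> List[Grid]:
    # All 4 rotations, each with and without a mirror: 8 variants in total.
    variants = []
    current = img
    for _ in range(4):
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants


def hamming(a: int, b: int) -> int:
    # Number of differing bits between two hashes.
    return bin(a ^ b).count("1")


def min_distance(a: Grid, b: Grid) -> int:
    # Smallest hash distance between `a` and any rotated/mirrored version of `b`.
    ha = average_hash(a)
    return min(hamming(ha, average_hash(v)) for v in dihedral_variants(b))


def is_same_meme(a: Grid, b: Grid, threshold: int = 5) -> bool:
    # Hypothetical decision rule: treat near-identical hashes as iterations of one meme.
    return min_distance(a, b) <= threshold
```

A mirrored-and-rotated copy of an image is matched at distance 0, while unrelated patterns, such as a grid that is bright on its left half versus a checkerboard, differ in half their bits. In practice, ready-made perceptual hashes are available in libraries such as the Python `imagehash` package; real meme tracking would also need to cope with cropping, captions and arbitrary rotation angles, which this sketch does not.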
