Auditory Perception and Cognition

Our research group studies how people hear. We investigate mechanisms in the human brain that underlie auditory perception and cognition. We aim to utilize this knowledge in practical and clinical applications.

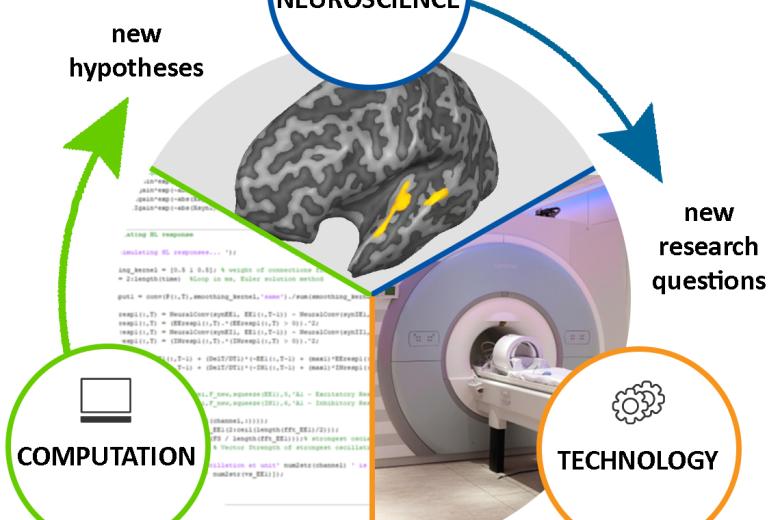

We use a multi-disciplinary experimental approach integrating:

- Non-invasive measurements of brain activity at high spatial (fMRI at 3T, 7T and 9.4T) and high temporal (EEG) resolution

- Psychophysics

- Non-invasive electric brain stimulation

- Multisensory stimulation (in standard lab or virtual reality settings)

- Computational modeling of auditory processing

- Advanced data analysis techniques (e.g., statistical pattern recognition and machine learning methods)

Looking for an internship? Please read more about our research topics below and contact us!

International Conference on Auditory Cortex

In September 2025, we hosted the 8th ICAC in Maastricht. You can still visit the conference website here.

AuditoRy Cognition in Humans and MachInEs (ARCHIE)

A bird chirping, a glass breaking, an ambulance passing by. Listening to sounds helps us recognize events and objects even when they are out of sight, for example in the dark or behind a wall. Despite rapid progress in the field of auditory neuroscience, we know very little about how the brain transforms acoustic sound representations into meaningful source representations. In this research line, we develop neurobiologically grounded computational models of sound recognition by combining advanced methodologies from information science, artificial intelligence, and cognitive neuroscience.

Examples of ongoing projects include:

- "Sounds" ontology development (in collaboration with Prof. M. Dumontier's IDS): "Sounds" is an ontology that characterizes a large number of everyday sounds and their taxonomic relations in terms of their acoustics, their sound-generating mechanisms, and the semantic properties of the corresponding sources.

- Deep neural networks (DNNs) development: We are developing ontology-based DNNs, which combine acoustic sound analysis with high-level information about the sound sources and learn to perform sound recognition tasks at different levels of abstraction.

- Cognitive Neuroimaging of sound recognition in humans: We measure behavioral and brain (sub-millimeter fMRI, iEEG, EEG, MEG) responses in human listeners as they perform sound recognition tasks. Then, we evaluate how well DNN-based and other models of sound processing explain measured behavioral and brain responses using state-of-the-art multivariate statistical methods.

Principal investigators

This research line is directed by Elia Formisano, Maastricht University (funding: NWO Open Competition SSH, Aud2Sem) and Bruno Giordano, Institut de Neurosciences de La Timone (funding: ANR AAPG2021, SoundBrainSem project) in close collaboration.

Auditory Plasticity in Health and Disease

This research line studies rapid and long-term changes in sound processing in the human brain. These changes may be adaptive, as in attention and learning, or maladaptive, as in tinnitus. We take a systems biology approach to study these changes. That is, we combine data across spatial scales (e.g. ultra-high field MRI data, genetics) with computational modeling to answer our research questions.

Principal investigator

Michelle Moerel

Cortical Correlates of Listening to Naturalistic Sounds

This research line investigates the brain processes underlying listening to natural sounds such as speech and music, both when they are presented in isolation and, as is often the case, when they occur simultaneously with other sounds. We measure brain activity with high-field fMRI, EEG, and their combination while participants listen to such natural sounds and perform listening tasks. This complementary set of data is analysed using multivariate decoding and encoding approaches (such as MVPA and speech tracking). The research spans topics such as auditory scene analysis and the fate of non-relevant or unattended sounds and their dependency on listening demand. With this approach, we hope to better understand how the human brain supports its unique listening capabilities in close to real-life settings.

Keywords: auditory scene analysis, selective attention, naturalistic sounds, high-field fMRI, EEG, multimodal imaging (EEG-fMRI), stimulus reconstruction, sound/stimulus tracking, decoding, encoding, speech, music

Principal investigator

Lars Hausfeld

meSoscopic Computational AuditioN lab (SCAN)

In our lab, we aim to understand how feedforward and feedback processing allow our brain to extract information from sounds. A particular focus is on how and where along the auditory pathway these processes contribute to forming predictions of what we are going to hear next. To understand the computations carried out by cortical layers and columns as well as subcortical structures, we develop approaches using a variety of methods. We use mathematical and computational models of auditory perception to form hypotheses about the algorithms carried out by neural populations.

We develop computational neuroimaging tools to link these models to measures of brain activity. To achieve the spatial and temporal resolution needed to study these computations, we exploit the high spatial resolution afforded by ultra-high magnetic field functional magnetic resonance imaging (fMRI) and the high temporal resolution attainable with non-invasive approaches such as electroencephalography (EEG) and magnetoencephalography (MEG). These approaches are geared towards understanding behaviour, which we study with auditory psychophysics.

Principal investigator

Federico De Martino

Multisensory Integration and Modulation (MIM)

The primary goals of the Multisensory Integration and Modulation (MIM) lab are to:

- Control human brain activity with sensory and electrical stimulation, and

- Utilise this control to:

  - Identify brain-activity patterns underlying specific sensory, cognitive, and motor functions

  - Support these functions in health and disease

We pursue these goals using a multi-methodological experimental approach that combines multisensory (auditory, tactile, and visual) and non-invasive electric (transcutaneous and transcranial) stimulation with simultaneous measurements of behavior (psychophysics and subjective reports) and brain activity (electroencephalography, functional near-infrared spectroscopy, and functional magnetic resonance imaging). We apply this approach in healthy participants and patients with disorders of consciousness.

Principal investigator

Lars Riecke

Statistical Modelling and Machine Learning for Neuroimaging data

The focus of this research line is to aid and improve neuroscientific investigation by developing and leveraging advanced statistical and machine learning models.

The use of complex data, such as those obtained with fMRI and electrophysiology, poses several methodological challenges with respect to modelling, analysis and interpretation. It is therefore of great importance to use analytical approaches that can account for such complexity and extract information correctly and efficiently. In this research line, we focus on the tailoring and optimization of machine learning models, including but not limited to Deep Neural Networks, to extract information from neuroimaging data and to test hypotheses. To this end, we employ both parametric models, with particular emphasis on Bayesian models, and non-parametric models, with emphasis on permutation and randomization tests.
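To illustrate the non-parametric side, the general idea of a permutation test can be sketched in a few lines of Python. This is a generic textbook example under simple assumptions (two independent groups, difference of means as the test statistic), not the group's own analysis code; the function name `permutation_test` and the example values are hypothetical.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10000, seed=0):
    """Two-sided permutation test on the difference of group means.

    Returns the observed difference and an estimated p-value.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    # Count how often a random relabelling of the pooled data yields a
    # difference at least as extreme as the observed one.
    count = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            count += 1

    # Add-one correction: the observed labelling counts as one permutation,
    # so the estimated p-value is never exactly zero.
    p_value = (count + 1) / (n_permutations + 1)
    return observed, p_value


if __name__ == "__main__":
    # Hypothetical response values for two conditions (illustration only).
    condition_a = [5.1, 5.3, 4.9, 5.2, 5.0, 5.4]
    condition_b = [1.0, 1.2, 0.9, 1.1, 1.3, 0.8]
    diff, p = permutation_test(condition_a, condition_b, n_permutations=2000)
    print(f"observed difference = {diff:.2f}, p = {p:.4f}")
```

The null distribution is built from the data themselves by shuffling condition labels, so no distributional form has to be assumed. That property is what makes such tests attractive for complex neuroimaging measures.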

Principal investigator

Giancarlo Valente

Section members

- Michele Esposito

- Agustin Lage Castellanos

Alumni

- Niels Disbergen

- Mario Archila Melendez

- Michele Svanera

Members

Current members

- Andra Elvika (secretary)

- Elia Formisano (Full professor)

- Federico De Martino (Full professor)

- Fren Smulders (Associate professor)

- Giancarlo Valente (Associate professor)

- Joost Haarsma (Assistant professor)

- Jorie van Haren (PhD Student)

- Lars Hausfeld (Assistant professor)

- Lars Riecke (Associate professor)

- Lonike Faes (PhD Student)

- Mahdi Enan (PhD Student)

- Michelle Moerel (Associate professor)

- Maria de Araújo Vitória (PhD Student)

- Parisa Naseri (Assistant professor)

Alumni

- Lidongsheng Xing (PhD Student, -2025)

- Xueying Fu (Postdoc, -2025)

- Min Wu (Postdoc, -2025)

- Michele Esposito (PhD, -2025)

- Agustin Lage Castellanos (Postdoc, -2024)

- Isma Zulfiqar (Postdoc, -2023)

- Miriam Heynckes (PhD, -2022)

- Merel Burgering (PhD, -2021)

- Martin Havlicek (Assistant professor, -2020)

- Niels Disbergen (PhD, -2020)

- Shruti Ullas (PhD, -2020)

- Vittoria De Angelis (PhD, -2019)

- Martha Shiell (Postdoc, -2019)

- Valentin Kemper (Postdoc, -2019)

- Julia Erb (Postdoc, -2019)

- Omer Faruk Gulban (PhD, -2018)

- Xu Chen (Postdoc, -2018)

- Kiki Derey (PhD, -2017)

- Sanne Rutten (PhD, -2017)

- Jessica Thompson (PhD, -2016)

- Emily Allen (PhD, -2016)

- Roberta Santoro (PhD, -2015)

- Noël Staeren (PhD, -2014)

- Anke Ley (PhD, -2013)

- Nick Kilian-Hütten (PhD, -2012)

- Aline de Borst (PhD, -2011)

- Hanna Renvall (Postdoc, -2008)