Understanding everyday hearing | Elia Formisano | ERC Synergy Grant

Imagine a radio playing. The first sound you hear is the gentle lapping of ocean waves, followed by the distant chatter of sunbathers and the sudden cries of seagulls. This blend of sounds, known as a soundscape, instantly brings to mind the image of a beach—perhaps even reminding you of a past summer vacation. This example highlights how sounds play a vital role in shaping our mental images of the world around us. Sound usually enhances what we see, helping us recognise objects and events. But it becomes especially important when visual information is missing or when we’re imagining a scene.

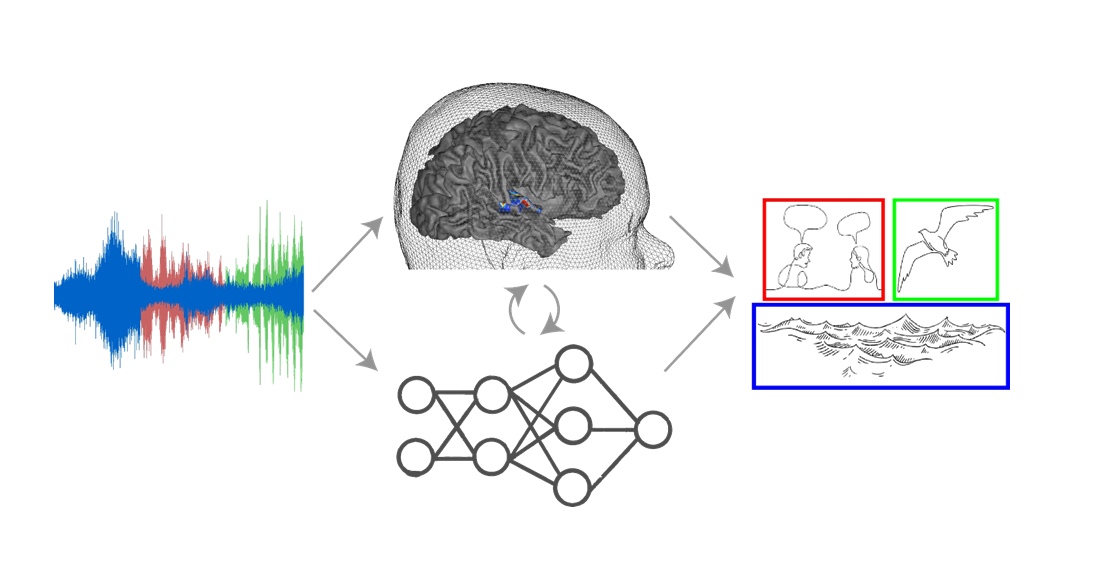

Elia Formisano, professor of Neural Signal Analysis, and his colleague Bruno Giordano at CNRS, France, have received the highly coveted Synergy Grant from the European Research Council. Their project, Natural Auditory SCEnes in Humans and Machines (NASCE): Establishing the Neural Computations of Everyday Hearing, will examine how our brain processes real-world soundscapes and how the different sounds are separated and linked to objects and events. They will measure behavioural and brain responses in human listeners and create AI models that can replicate the observed mechanisms.

Formisano and Giordano have been unravelling the mysteries of human hearing for a while. Last year, we talked to Formisano about a precursor to this project, in which they explored how the brain links sounds to objects. If you hear a chirp, you think: bird. They also developed AI models that function similarly.

Semantic segmentation

That project looked at sounds coming from an isolated source. “In real life, you almost never hear only one sound. So, with this project we further develop this theory and model to look at how the brain is so good at separating different sounds from one another and linking them to the correct objects”. This ability is one of the first things we lose as we age: separating sounds becomes harder and harder. “We’re proposing a new theory of how the brain does this, called Semantic Segmentation”. This grant will allow them to develop and prove the theory.

“We’re actively combining three major disciplines: Cognitive Psychology, Neuroscience, and Artificial Intelligence. That means looking at human behaviour, the brain and AI models. In our experiments we will see how the actual measurements of behaviour and the brain compare with the neural networks”.

The link between meaning and processing

“We propose that our auditory cortex works at ‘object’ level. In the example of the beach, the moment our brain identifies the ocean, the auditory resources dedicated to the ocean can be, and will be, diverted to the other sounds, which are typically associated with the ocean (like seagulls, chatter, maybe an airplane). That way, the brain divides the complex sound waves into easily identifiable objects and forms an image of the soundscape”.

In this new theory, Formisano and Giordano talk about the strong link between the meaning of a sound (semantics) and the processing of the sound (acoustics). “If you, for example, hear the trampling of hooves, you will automatically think of neighing, even though these sounds are acoustically very different. Our brain creates the link between the two sounds by meaning. We believe this association helps us to analyse the sounds we hear around us”. This is one of the aspects of our hearing that they want to transfer into the computer model.

In the coming six years, Formisano will use the grant funding to further develop and prove the theory and to gather the best people to do this. “I also want to take a moment to thank our team behind the scenes, especially Marcel Giezen and Liane Ligtenberg, who make the difficult process of grant application possible. And the students and postdocs in Maastricht (Michele, Maria, Gijs, Tim) and Marseille (Christian, Marie, Giorgio) who work with us every day and helped us develop this research theme”.
